Publish or perish is the academic axiom that breeds anxiety, provides a road map and is internalized in decision-making. The emphasis on publication outputs has intensified with academic metrics that measure, rank and evaluate the performance of individuals and universities. While the metrics have plenty of critics, few institutional or policy projects seem poised to break them. A new plan in the Netherlands might be the start of a change. But it will be hard to get traction.

Breaking the tyranny of metrics?

Utrecht University in the Netherlands recently announced that it would be changing the way it evaluates faculty and academic staff for hiring and promotion decisions and evaluating academic departments. Nature reported the change at the end of June. According to the Nature story:

By early 2022, every department at Utrecht University in the Netherlands will judge its scholars by other standards, including their commitment to teamwork and their efforts to promote open science …

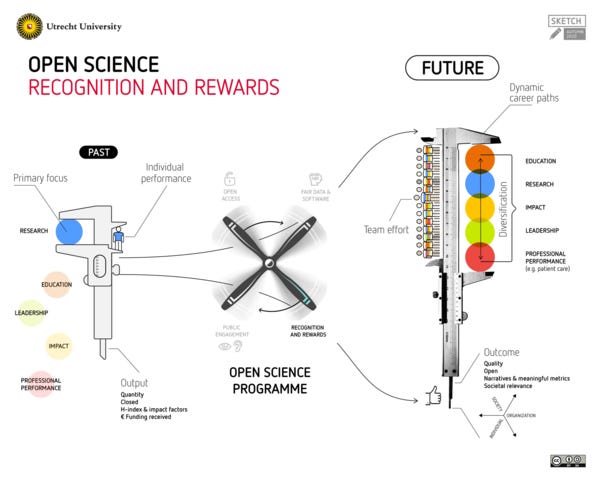

The new plan to assess academic work is charted by a Recognition and Reward project (errr, so far as I can tell this is a committee, universities love committees) at Utrecht which is part of a wider Open Science initiative that seeks to “promote open science as part of its promise to make science more open and even more reliable, efficient and relevant to society.”

Under the proposed system, individual performance metrics, including the H-index, will be abandoned in favor of less defined, less individualistic assessments designed to promote open science and social responsiveness.

Aside - what is an H-index? Good question. The H-index is a metric that represents a researcher’s accomplishments in a single number: the largest n such that n of their publications have each been cited at least n times (if my 10 most cited papers each have at least 10 citations, but my 11th most cited publication has 9 citations, my H-index is 10). Some people are obsessed with the H-index and other metrics. Seriously, they are.
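For the curious, the H-index is easy to compute by hand or in a few lines of code. Here is a minimal sketch in Python. The function name `h_index` and the citation counts are my own, made up for illustration; this is not how any particular database or university calculates it, just the textbook definition written out.

```python
def h_index(citations):
    """Return the largest n such that n papers have at least n citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            # The paper at this rank still has enough citations to count.
            h = rank
        else:
            # Every later paper has even fewer citations, so stop here.
            break
    return h


# Hypothetical citation counts: ten papers with at least 10 citations each,
# plus an 11th paper with 9 citations.
example = [50, 40, 33, 25, 20, 18, 15, 12, 11, 10, 9]
print(h_index(example))  # prints 10
```

Notice that the 50-citation paper counts exactly as much as the 10-citation paper. That flattening is part of why critics find the number so unsatisfying.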

Anyway, below is how Utrecht is representing its new initiative. Yeah, I know.

Why does the Utrecht announcement matter?

Well, I took note for a couple of reasons. Utrecht is a major research university, so this is kind of a big deal because an incumbent actor (a privileged player in the game) is making a significant break. Utrecht is a member of the League of European Research Universities (LERU), which is a network of 23 leading research universities in Europe. It’s the rough equivalent of the Association of American Universities (AAU) in North America. It’s a big producer of metric-able research. Utrecht ranks 79th in the most recent CWTS Leiden Rankings (I know, using metrics in a post on busting metrics, brah), which is a field normalized fractional count index of publication outputs (just ignore that, it’s a ranking, ok). That puts Utrecht in the neighborhood of UT Austin, Berkeley, and NYU in terms of research outputs. Utrecht ranks 9th - TOP TEN - in Europe. So, you see, as far as global research universities go, Utrecht is a BFD, and an even B-iggerFD in the European context.

Another thing that caught my attention about Utrecht’s announcement is the direct language used by the Recognition and Reward project’s director (I think the Dutch are known for being a little bit direct, but I shouldn’t dabble in stereotypes). Quoted in Nature, Professor Dr. Boselie said that metric evaluations are leading to the “productification” of science. Boselie also said of metric-based assessment:

It has become a very sick model that goes beyond what is really relevant for science and putting science forward.

That is a pretty strong indictment of the current system. But the critique will be familiar to those who have concerns over how the publish or perish system in academia prioritizes quantity over quality and the competitive atmosphere of individualism and self-promotion in academic life (see also, academic Twitter).

The big question: will it work?

Boselie admits the new system will be hard to implement. Each department will have to come up with its own evaluation system, and if you know academics, you know that will NOT be an easy process. Even if it does take hold in Utrecht, will it spread and overthrow the tyranny of metrics? Let’s consider that question.

Where could change occur?

When I read about the Utrecht move, my first thought was, I wonder what allowed them to break away? I started to think about other academic systems that have a large presence in global science. Could something like this have happened elsewhere?

How about in the USA? Probably not.

Interestingly, world rankings and other research-based public metrics are not as influential in the US as they are in other countries. And the major US ranking system, US News and World Report, doesn’t measure research directly. When Barrett Taylor and I were working on our book Unequal Higher Education, I noticed a difference between the way people from the US and other parts of the world responded to our work. The book uses a latent variable analysis to categorize colleges and universities in the US. Every non-US audience asked us why we didn’t include a research variable in our model, and no US audience asked that question. The simple answer is the same for US News. We didn’t have to. Research largely covaries with other measures like admission selectivity and resource intensity, so other measures capture it without observing it directly. So far as I know, few academic departments or universities maintain explicit guidance on using metrics like H-index for making hiring and promotion decisions. However, such criteria are absolutely informally used.

Given the indirect use of research in public metrics and formal procedures, maybe the US is a good place to break out of the metrics rat race? Nope. Probably not. Publish or perish remains the standard for academics at research universities and at not-quite research universities in the US. And the AAU is a powerful anchor. AAU indicators prioritize research metrics, like a lot. Check them out. Marginal AAU members fear losing membership (which happened not too long ago to the University of Nebraska and Syracuse). Major research universities on the cusp aspire to membership. All other universities with a research mission or pretenses just try to tread water and stay in the pool (my metaphors are dreadful). Groups like the University Innovation Alliance promote collaboration but focus on undergraduate education and don’t threaten research competition. Arizona State’s President Michael Crow advocates for a New American University and Fifth Wave University, which share some ideas with the Utrecht plan. However, a skeptic might say that Crow’s model reflects the inability of upstart public universities to keep up with the most established research universities rather than a force that can disrupt the model that benefits the incumbent universities. ASU is not an AAU member, after all.

How about China? Probably not.

China is in the midst of a massive research catch-up project that began in earnest in the 1990s. The current program, the Double World-Class Project, is designed to bolster China’s position. It focuses on raising Chinese academic disciplines and universities on the world stage. The plan has a long time horizon, to 2050, and combined with previous investments in research, it seems to be working. As a result, Chinese science is taking off and now produces more academic papers than any other country.

Metrics are a big part of Chinese higher education, and that is probably not going to change soon. Along with the Double World-Class Project, the Belt and Road foreign policy features higher education components. Both seek to expand China’s cultural as well as economic influence and cement its status as a superpower. I am not an expert on Chinese higher education, but I don’t see a movement to break from metrics emerging from Beijing.

How about some of the larger European countries like Germany, France, or Italy? Probably not.

To be fair, these countries are not at the top of my mind when I think of competition in university rankings or academic metrics. This is partly because a lot of work happens in national languages, which is less recognized by the metrics, though most science papers are in English no matter where the researchers are from. My understanding is that the French are a bit upset about their lackluster performance in world rankings (see this example; anyway, this is my impression, I don’t have deep insights here), but the Germans are less concerned (again, my impression). Italian higher education remains somewhat of a mystery to me (probably I shouldn’t admit this). These systems have not adopted the American research university model, which has been taken up more or less in China and much of the world. They don’t seem well-positioned to implement and propagate an organizational innovation like the one proposed in Utrecht. I could well be wrong. Let me know if I am and why.

How about the UK or Australia? Probably not.

Australia’s higher education system is facing deep and painful cuts. The cuts have the potential to weaken the sector for a while. And besides, Australian research funding prioritizes metric outputs. As the government research funding page explains, “Research performance is also often a basis for academic hiring and promotion, acting as an incentive (beyond inherent motivation) for individual researchers to maintain research activity.” Australia seems an unlikely candidate to bust out of the global metrics system.

Same for the UK, though it’s not taking the same hit Australia is. The Research Excellence Framework is a hyper-competitive periodic evaluation of research performance at the department level that determines research funding for nearly a decade. The REF is underway now for the first time since 2014. This system locks the UK into metrics attention for the foreseeable future, I think.

Others? I don’t know, probably not. This is not an exhaustive analysis.

So what’s the point?

If Utrecht is able to successfully implement a policy of excluding research metrics like impact factors, H-indexes, and grant Euros from hiring and promotion decisions, it would seem distinctly positioned. Utrecht would seem secure in its position to take the risk and free to do it because of governance arrangements that give universities autonomy and funding structures that seemingly allow it to make a move.

Burton Clark’s famous triangular coordination model positions higher education between controlling forces. Simon Marginson summarized the model this way in an open-access book.

Clark locates three Weberian ideal types at the points of the triangle: systems driven by states, systems driven by market forces, and systems driven by academic oligarchies. He positions each national higher education system within the triangle, with the United States closest to market coordination, Soviet Russia closest to state control, Italy closest to academic oligarchy, and so on.

The model is imperfect and needs updating (well, it’s been updated a million times, so maybe not?), but it points to a real challenge in how to assess academic work and distribute opportunities and rewards. Over the past several decades, direct state control of higher education has given way to more autonomous forms of governance. Even in party-state China, which features strong central planning, the system is too large and complex for direct control by the central government, though the party plays a large role in university governance. At the same time, the academic oligarchy (control of the university by professors) is seen as increasingly unacceptable by society and by the non-professor academics in the systems where professors have the most power. Most societies want some type of social responsiveness from higher education and do not accept that professors can occupy the university for themselves. Even if we worry about contingency and a weakening academic profession, which I do worry about, I think it’s also untenable to say that the faculty have no responsibility to anyone other than other professors, even if we vigorously defend academic freedom. With the state steering higher education from more of a distance and the academic oligarchy unable to retain supreme control in many systems, “the market,” or something market adjacent, has taken a stronger role in system steering. And that is where metrics madness comes in.

Metrics also work because they tap into individual academics’ sense of competition and drive. Even critical academics who vigorously oppose neoliberal evaluations are often individually ambitious and want to influence their peers. We academics are a vain lot, and we want recognition (I mean, come on, I am writing this thing at 10:00 PM on a holiday because why? Partly because I like the sound of my own keyboard clacking away).

So now I appear to be drifting … so, let’s try to tie this thing up. Here is how I think Utrecht’s model might work. If departments are able to establish consensus methods of evaluating individual and collective work based on standards of openness and social responsiveness that value teaching, research, public engagement, and service, then good for them. If they are able to get enough good reaction from other academics, the media, and the government that they can persuade other universities to do the same, then the approach could spread in Northern Europe to start. How it gets beyond the region is a sticking point. I think they’d have to re-set worldwide cultural expectations about academia. To do that, I think funding and reward structures would have to change to reflect and encourage a shifting culture. Maybe it’s possible in this super vague model I have outlined. Maybe. But inertia is strong.

Ok, enough.

A favor, please.

If you read all the way to this point, then you probably liked or hated what I had to say (the internet has lots of hate reading). Either way, please consider subscribing to my newsletter blog thing-y and sharing this post on social media. Cheers!